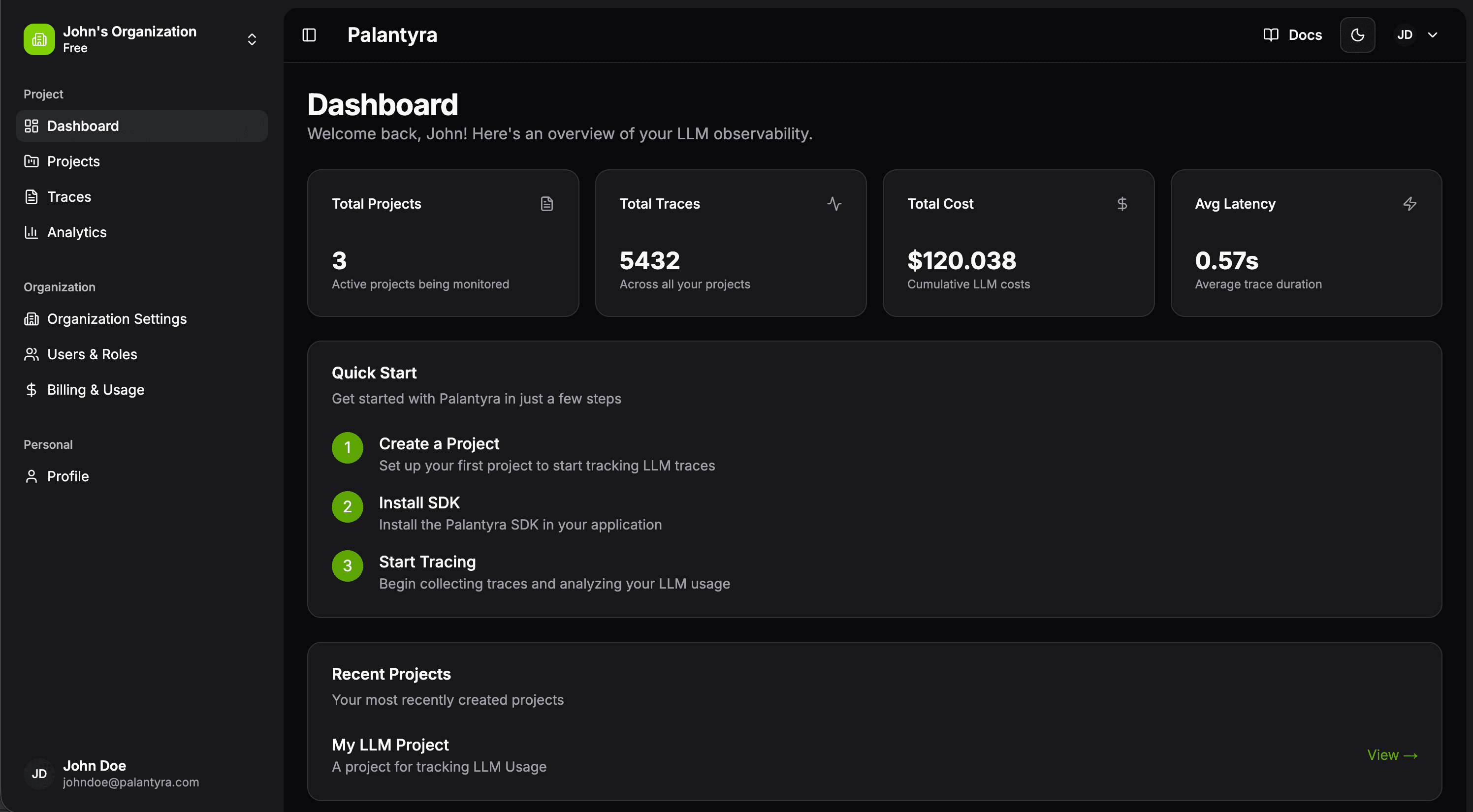

Complete AI Observability for LLM Applications

Monitor, trace, and optimize your LLM applications with automatic cost tracking, performance analytics, and real-time insights across all providers.

Just initialize once and all your LLM calls are automatically traced with costs, performance metrics, and errors.
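To illustrate the "initialize once" idea, here is a minimal sketch of how a one-time setup call could wrap a client method so that every later call is recorded with its latency and any error. The names `init`, `traced`, `TRACES`, and `FakeClient` are hypothetical and used only for illustration; they are not this platform's SDK, and a real integration would send records to a backend rather than keep them in a list.

```python
import functools
import time

# In-memory trace store (assumption: a real platform would export these).
TRACES = []


def traced(fn):
    """Wrap a callable so each call records its latency and any error type."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        record = {"name": fn.__name__, "error": None}
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            # Record the error category, then re-raise so callers still see it.
            record["error"] = type(exc).__name__
            raise
        finally:
            record["latency_s"] = time.perf_counter() - start
            TRACES.append(record)
    return wrapper


def init(client_cls, method_name):
    """Hypothetical one-time setup: patch a single method on a client class."""
    setattr(client_cls, method_name, traced(getattr(client_cls, method_name)))


class FakeClient:
    """Stand-in for a provider SDK client (not a real SDK)."""

    def complete(self, prompt):
        if not prompt:
            raise ValueError("empty prompt")
        return prompt.upper()
```

After `init(FakeClient, "complete")` runs once, every `FakeClient().complete(...)` call appends a record to `TRACES` without any change to the calling code.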

Monitor costs, track performance, and analyze your LLM applications with real-time insights, automatic tracing, and comprehensive analytics across all providers.

Works with OpenAI, Anthropic, DeepSeek, and any provider using the OpenAI SDK format, with unified monitoring across all of them.

API calls traced across all providers

Real-time cost calculation for all providers

Track individual call costs

Compare costs across models

Aggregate costs per user
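As a rough sketch of the three cost views above, the snippet below computes the cost of a single call from token counts, compares the same workload across models, and sums costs per user. The model names and per-token prices in `PRICES` are made-up placeholders, not real provider rates, and the function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens as (input, output). Not real rates.
PRICES = {
    "model-a": (1.00, 2.00),
    "model-b": (0.50, 1.50),
}


def call_cost(model, input_tokens, output_tokens):
    """Cost in USD of one call, given its token counts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def compare_models(input_tokens, output_tokens):
    """Cost of the same workload on every model in the price table."""
    return {m: call_cost(m, input_tokens, output_tokens) for m in PRICES}


def cost_per_user(calls):
    """Sum call costs per user; each call is a dict with user, model, tokens."""
    totals = defaultdict(float)
    for call in calls:
        totals[call["user"]] += call_cost(
            call["model"], call["input_tokens"], call["output_tokens"]
        )
    return dict(totals)
```

Keeping prices in one table means a rate change only touches `PRICES`, while the per-call, per-model, and per-user views all stay consistent.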

Monitor latency, throughput, and performance metrics. Get insights into bottlenecks and optimization opportunities.

Automatic error detection with real-time alerts and detailed categorization for quick resolution.

Start free and scale with your needs. Choose the plan that fits your AI observability requirements.

Perfect for getting started with AI observability and testing the platform

Best value for growing AI applications that need more advanced features

Advanced plan with enhanced security and unlimited access for large teams

Made with love by Tanmay Sharma